Page 91 - Demo

P. 91

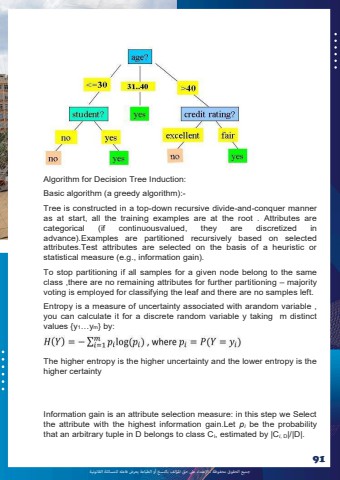

%u062c%u0645%u064a%u0639 %u0627%u0644%u062d%u0642%u0648%u0642 %u0645%u062d%u0641%u0648%u0638%u0629 %u0640 %u0627%u0625%u0644%u0639%u062a%u062f%u0627%u0621 %u0639%u0649%u0644 %u062d%u0642 %u0627%u0645%u0644%u0624%u0644%u0641 %u0628%u0627%u0644%u0646%u0633%u062e %u0623%u0648 %u0627%u0644%u0637%u0628%u0627%u0639%u0629 %u064a%u0639%u0631%u0636 %u0641%u0627%u0639%u0644%u0647 %u0644%u0644%u0645%u0633%u0627%u0626%u0644%u0629 %u0627%u0644%u0642%u0627%u0646%u0648%u0646%u064a%u062991Algorithm for Decision Tree Induction: Basic algorithm (a greedy algorithm):- Tree is constructed in a top-down recursive divide-and-conquer manner as at start, all the training examples are at the root . Attributes are categorical (if continuousvalued, they are discretized in advance).Examples are partitioned recursively based on selected attributes.Test attributes are selected on the basis of a heuristic or statistical measure (e.g., information gain). To stop partitioning if all samples for a given node belong to the same class ,there are no remaining attributes for further partitioning %u2013 majority voting is employed for classifying the leaf and there are no samples left. Entropy is a measure of uncertainty associated with arandom variable , you can calculate it for a discrete random variable y taking m distinct values {y1%u2026ym} by: The higher entropy is the higher uncertainty and the lower entropy is the higher certainty Information gain is an attribute selection measure: in this step we Select the attribute with the highest information gain.Let pi be the probability that an arbitrary tuple in D belongs to class Ci, estimated by |Ci, D|/|D|.