Page 99 - Demo

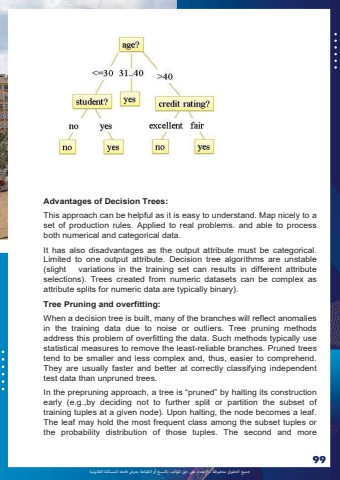
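
The prepruning idea described on this page (halt tree construction early and turn the node into a leaf that holds the most frequent class or the class distribution) can be sketched as follows. This is a minimal illustration, not the book's algorithm: the function names, the `min_samples` and `min_gain` thresholds, and the choice of Gini impurity as the statistical measure are all assumptions made for the example. It handles numeric attributes with binary splits only.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (assumed split measure)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def make_leaf(labels):
    """A leaf stores the most frequent class and the class distribution."""
    counts = Counter(labels)
    n = len(labels)
    return {
        "leaf": True,
        "label": counts.most_common(1)[0][0],
        "distribution": {cls: k / n for cls, k in counts.items()},
    }


def best_split(rows, labels):
    """Return (weighted_gini, feature, threshold) of the best binary split, or None."""
    best = None
    for f in range(len(rows[0])):
        # Every distinct value except the largest keeps both sides non-empty.
        for t in sorted({r[f] for r in rows})[:-1]:
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(rows, labels, min_samples=4, min_gain=0.01):
    """Grow a tree, halting early (prepruning) when a split is not worthwhile."""
    # Prepruning: too few tuples, or node already pure -> the node becomes a leaf.
    if len(labels) < min_samples or gini(labels) == 0.0:
        return make_leaf(labels)
    split = best_split(rows, labels)
    # Prepruning: no split possible, or impurity reduction below the threshold.
    if split is None or gini(labels) - split[0] < min_gain:
        return make_leaf(labels)
    _, f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return {
        "leaf": False,
        "feature": f,
        "threshold": t,
        "left": build_tree([r for r, _ in left], [y for _, y in left], min_samples, min_gain),
        "right": build_tree([r for r, _ in right], [y for _, y in right], min_samples, min_gain),
    }


def predict(tree, row):
    """Follow the binary splits down to a leaf and return its majority class."""
    while not tree["leaf"]:
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree["label"]


# Toy data (hypothetical): one numeric attribute, with a noisy label at 8.0.
rows = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0], [7.0], [8.0]]
labels = ["no", "no", "no", "no", "yes", "yes", "yes", "no"]

pruned = build_tree(rows, labels, min_samples=5)    # stops before isolating the outlier
unpruned = build_tree(rows, labels, min_samples=2)  # keeps splitting until it fits the outlier
```

With the larger `min_samples`, the right-hand node stops as a leaf that records the majority class together with its distribution, whereas the smaller value lets the tree grow an extra branch just to fit the single noisy tuple. Choosing such thresholds is itself a judgment call: too strict and the tree is oversimplified, too loose and little is pruned.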

All rights reserved. Infringing the author's rights by copying or printing exposes the offender to legal liability.

Advantages of Decision Trees: Decision trees are easy to understand, map nicely to a set of production rules, have been applied to real problems, and can process both numerical and categorical data. They also have disadvantages: the output attribute must be categorical; only one output attribute is allowed; decision tree algorithms are unstable (slight variations in the training set can result in different attribute selections); and trees created from numeric datasets can be complex, because attribute splits for numeric data are typically binary.

Tree Pruning and Overfitting: When a decision tree is built, many of the branches will reflect anomalies in the training data due to noise or outliers. Tree pruning methods address this problem of overfitting the data. Such methods typically use statistical measures to remove the least reliable branches. Pruned trees tend to be smaller and less complex and, thus, easier to comprehend. They are usually faster and better at correctly classifying independent test data than unpruned trees.

In the prepruning approach, a tree is “pruned” by halting its construction early (e.g., by deciding not to further split or partition the subset of training tuples at a given node). Upon halting, the node becomes a leaf. The leaf may hold the most frequent class among the subset tuples or the probability distribution of those tuples. The second and more