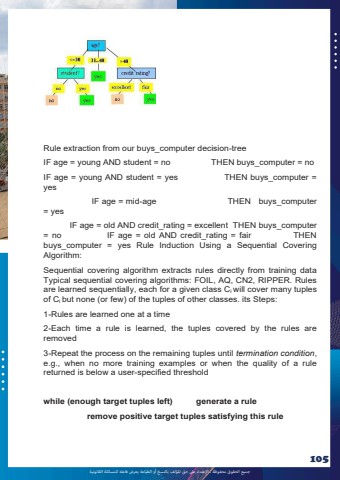

All rights reserved. Infringing the author's rights by copying or printing exposes the offender to legal liability.

Rule extraction from our buys_computer decision tree:

- IF age = young AND student = no THEN buys_computer = no
- IF age = young AND student = yes THEN buys_computer = yes
- IF age = mid-age THEN buys_computer = yes
- IF age = old AND credit_rating = excellent THEN buys_computer = no
- IF age = old AND credit_rating = fair THEN buys_computer = yes

Rule Induction Using a Sequential Covering Algorithm:

- A sequential covering algorithm extracts rules directly from the training data, without building a decision tree first.
- Typical sequential covering algorithms: FOIL, AQ, CN2, RIPPER.
- Rules are learned sequentially: each rule for a given class Ci should cover many tuples of Ci but none (or few) of the tuples of other classes.

Steps:

1. Rules are learned one at a time.
2. Each time a rule is learned, the tuples covered by that rule are removed.
3. The process is repeated on the remaining tuples until a termination condition is met, e.g., when there are no more training examples or when the quality of the rule returned is below a user-specified threshold.

In outline:

    while (enough target tuples left)
        generate a rule
        remove positive target tuples satisfying this rule
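
The sequential covering loop described above can be sketched in Python. This is a minimal illustration under stated assumptions, not FOIL, AQ, CN2, or RIPPER: the names `learn_one_rule`, `sequential_covering`, and `min_accuracy` are hypothetical, rule quality is taken to be plain accuracy on the remaining tuples, and each rule is grown greedily by adding one attribute test at a time. The training data is also an assumption: it simply enumerates all 12 combinations of age, student, and credit_rating and labels them with the buys_computer decision-tree rules listed above.

```python
from itertools import product

ATTRS = ("age", "student", "credit_rating")

def tree_label(t):
    """Label a tuple using the buys_computer decision-tree rules from the text."""
    if t["age"] == "young":
        return "yes" if t["student"] == "yes" else "no"
    if t["age"] == "mid-age":
        return "yes"
    return "yes" if t["credit_rating"] == "fair" else "no"  # age = old

def covers(rule, t):
    """A rule is a dict of attribute tests; it covers t if every test holds."""
    return all(t[a] == v for a, v in rule.items())

def coverage(rule, data):
    return sum(covers(rule, t) for t in data)

def accuracy(rule, data, target):
    """Assumed quality measure: fraction of covered tuples in the target class."""
    n = coverage(rule, data)
    if n == 0:
        return 0.0
    return sum(covers(rule, t) and t["class"] == target for t in data) / n

def learn_one_rule(data, target):
    """Greedy general-to-specific search: add the best single test each round."""
    rule = {}
    while accuracy(rule, data, target) < 1.0 and len(rule) < len(ATTRS):
        candidates = [
            {**rule, a: v}
            for a in ATTRS if a not in rule
            for v in sorted({t[a] for t in data})
        ]
        # Prefer higher accuracy, then larger coverage.
        rule = max(candidates,
                   key=lambda r: (accuracy(r, data, target), coverage(r, data)))
    return rule

def sequential_covering(data, target, min_accuracy=0.8):
    """Learn rules for one class, one at a time, as in the steps above."""
    rules, remaining = [], list(data)
    while any(t["class"] == target for t in remaining):  # target tuples left
        rule = learn_one_rule(remaining, target)
        if accuracy(rule, remaining, target) < min_accuracy:
            break  # rule quality below the user-specified threshold
        rules.append(rule)
        # Remove the positive target tuples satisfying this rule.
        remaining = [t for t in remaining
                     if not (covers(rule, t) and t["class"] == target)]
    return rules

# Hypothetical training set: every attribute combination, labeled by the tree.
data = []
for age, student, credit in product(("young", "mid-age", "old"),
                                    ("no", "yes"),
                                    ("fair", "excellent")):
    t = {"age": age, "student": student, "credit_rating": credit}
    t["class"] = tree_label(t)
    data.append(t)

rules = sequential_covering(data, target="yes")
for r in rules:
    tests = " AND ".join(f"{a} = {v}" for a, v in r.items())
    print(f"IF {tests} THEN buys_computer = yes")
```

On this toy data the sketch learns three rules for buys_computer = yes, and they match the three "yes" rules extracted from the decision tree. Lowering `min_accuracy` would allow rules that also cover some tuples of the other class, which corresponds to the "none (or few)" wording above.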