Page 109 - Demo

P. 109

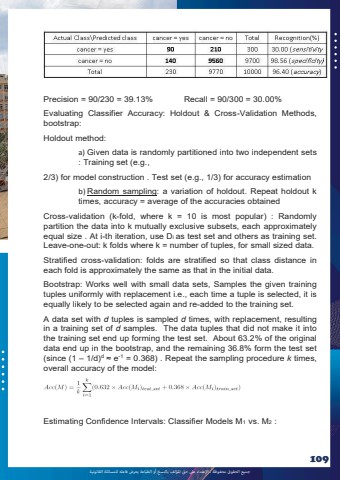

%u062c%u0645%u064a%u0639 %u0627%u0644%u062d%u0642%u0648%u0642 %u0645%u062d%u0641%u0648%u0638%u0629 %u0640 %u0627%u0625%u0644%u0639%u062a%u062f%u0627%u0621 %u0639%u0649%u0644 %u062d%u0642 %u0627%u0645%u0644%u0624%u0644%u0641 %u0628%u0627%u0644%u0646%u0633%u062e %u0623%u0648 %u0627%u0644%u0637%u0628%u0627%u0639%u0629 %u064a%u0639%u0631%u0636 %u0641%u0627%u0639%u0644%u0647 %u0644%u0644%u0645%u0633%u0627%u0626%u0644%u0629 %u0627%u0644%u0642%u0627%u0646%u0648%u0646%u064a%u0629109Precision = 90/230 = 39.13% Recall = 90/300 = 30.00% Evaluating Classifier Accuracy: Holdout & Cross-Validation Methods, bootstrap: Holdout method: a) Given data is randomly partitioned into two independent sets : Training set (e.g., 2/3) for model construction . Test set (e.g., 1/3) for accuracy estimation b) Random sampling: a variation of holdout. Repeat holdout k times, accuracy = average of the accuracies obtained Cross-validation (k-fold, where k = 10 is most popular) : Randomly partition the data into k mutually exclusive subsets, each approximately equal size . At i-th iteration, use Di as test set and others as training set. Leave-one-out: k folds where k = number of tuples, for small sized data. Stratified cross-validation: folds are stratified so that class distance in each fold is approximately the same as that in the initial data. Bootstrap: Works well with small data sets, Samples the given training tuples uniformly with replacement i.e., each time a tuple is selected, it is equally likely to be selected again and re-added to the training set. A data set with d tuples is sampled d times, with replacement, resulting in a training set of d samples. The data tuples that did not make it into the training set end up forming the test set. About 63.2% of the original data end up in the bootstrap, and the remaining 36.8% form the test set (since (1 %u2013 1/d)d %u2248 e-1 = 0.368) . Repeat the sampling procedure k times, overall accuracy of the model: Estimating Confidence Intervals: Classifier Models M1 vs. M2 :