

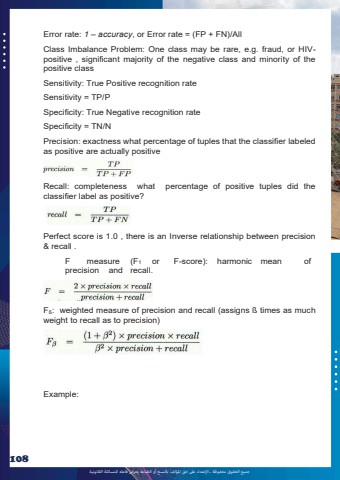

All rights reserved. Infringing the author's rights by copying or printing exposes the offender to legal liability.

- Error rate: 1 − accuracy, or Error rate = (FP + FN)/All
- Class imbalance problem: one class may be rare (e.g. fraud, or HIV-positive), so the data contain a significant majority of the negative class and only a minority of the positive class.
- Sensitivity: true positive recognition rate, Sensitivity = TP/P
- Specificity: true negative recognition rate, Specificity = TN/N
- Precision: exactness, i.e. what percentage of tuples that the classifier labeled as positive are actually positive? Precision = TP/(TP + FP)
- Recall: completeness, i.e. what percentage of positive tuples did the classifier label as positive? Recall = TP/(TP + FN)
- A perfect score for either measure is 1.0. Precision and recall usually trade off against each other: raising one tends to lower the other.
- F measure (F1 or F-score): harmonic mean of precision and recall, F = 2 × precision × recall/(precision + recall)
- Fβ: weighted measure of precision and recall (assigns β times as much weight to recall as to precision), Fβ = (1 + β²) × precision × recall/(β² × precision + recall)

Example:
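The formulas listed above can be collected into one small helper. This is a minimal Python sketch, assuming the counts TP, FN, FP and TN come from a binary confusion matrix in which the rare class is treated as positive. The names `ConfusionCounts`, `metrics` and `_ratio` are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention, not something the slides specify.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical container for binary confusion-matrix counts."""
    tp: int  # positive tuples labeled positive
    fn: int  # positive tuples labeled negative
    fp: int  # negative tuples labeled positive
    tn: int  # negative tuples labeled negative

    @property
    def p(self) -> int:
        # P: number of actual positive tuples
        return self.tp + self.fn

    @property
    def n(self) -> int:
        # N: number of actual negative tuples
        return self.fp + self.tn

    @property
    def total(self) -> int:
        # All: total number of tuples
        return self.p + self.n


def _ratio(num: float, den: float) -> float:
    # Assumed convention: return 0.0 instead of dividing by zero
    return num / den if den else 0.0


def metrics(c: ConfusionCounts, beta: float = 1.0) -> dict[str, float]:
    """Compute the evaluation measures from the slide (hypothetical helper)."""
    accuracy = _ratio(c.tp + c.tn, c.total)
    precision = _ratio(c.tp, c.tp + c.fp)   # TP / (TP + FP)
    recall = _ratio(c.tp, c.p)              # TP / P, identical to sensitivity
    f1 = _ratio(2 * precision * recall, precision + recall)
    b2 = beta ** 2
    # F-beta weights recall beta times as much as precision
    f_beta = _ratio((1 + b2) * precision * recall, b2 * precision + recall)
    return {
        "accuracy": accuracy,
        "error_rate": _ratio(c.fp + c.fn, c.total),  # equals 1 - accuracy
        "sensitivity": recall,
        "specificity": _ratio(c.tn, c.n),            # TN / N
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "f_beta": f_beta,
    }


if __name__ == "__main__":
    # Made-up counts for illustration only: 100 positives, 900 negatives
    print(metrics(ConfusionCounts(tp=90, fn=10, fp=30, tn=870), beta=2.0))
```

With these made-up counts, accuracy is 0.96 while precision is only 0.75, which illustrates why accuracy alone can look misleadingly good when the positive class is rare.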